Climbing Stillwater’s Main Street Stairs.

Off to the Stillwater area this morning. Starting with breakfast at Mon Petit Chéri.

It seems that affogatos have suddenly become popular at a number of different places. Sadly, most places that have good ice cream have terrible coffee, and vice versa. However, I was very impressed with Sebastian Joe’s delicious rendition. If only they didn’t serve it in a paper cup. 🍦☕️

We walked over to the Linden Hills Fall Festival to check out the 50th annual event. It was a nice neighborhood event, but the highlight for us was Cal Pflum playing on the stage. He is very young but has a really great voice!

We found the Illuminati. 😁

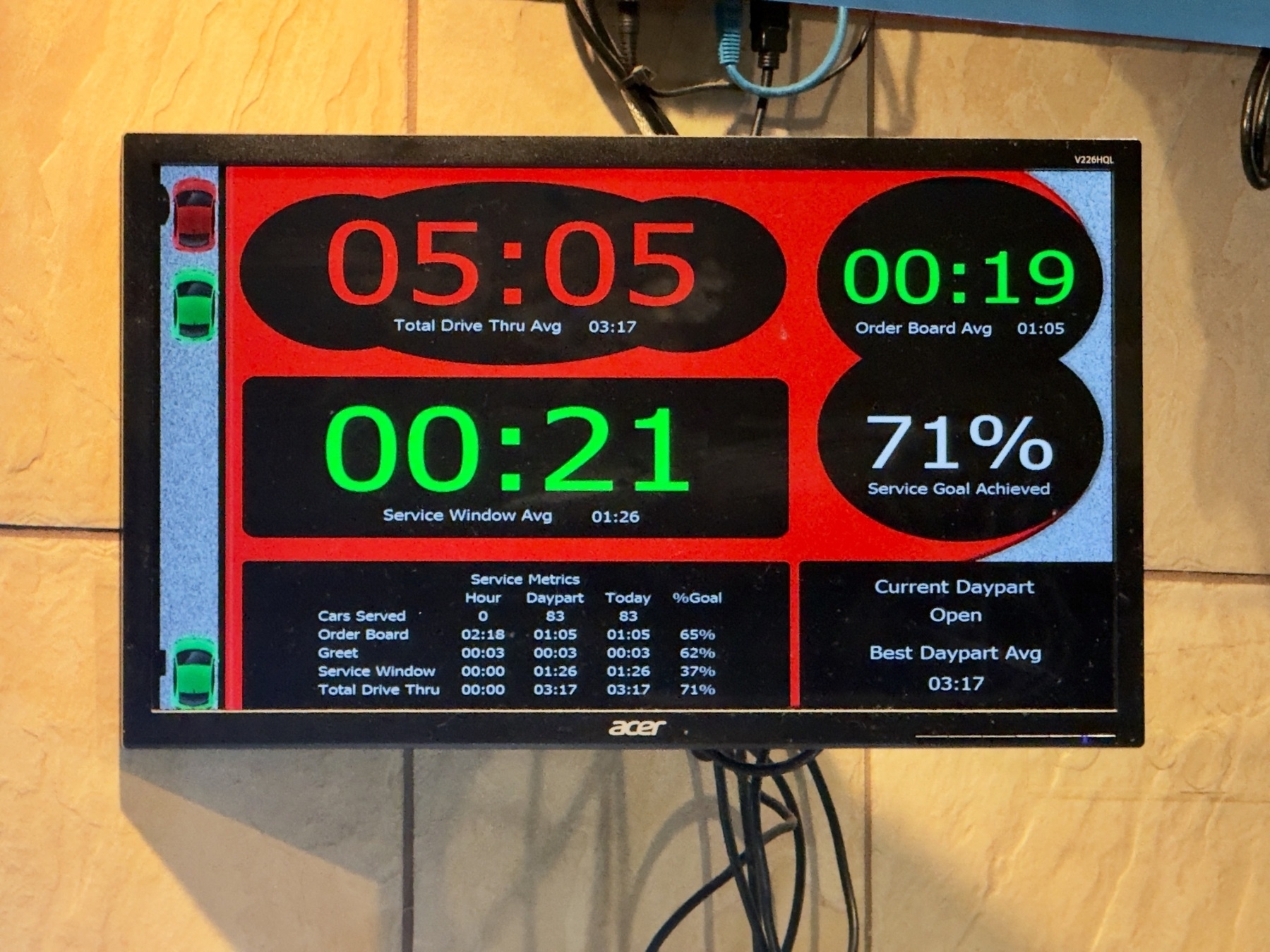

Drive-thru metrics. Needs some sparklines. Too many numbers, not enough easy-to-read lines.
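A sparkline can be as simple as one character per data point. Here is a minimal sketch in Python, assuming the metrics are just a list of numbers (the sample drive-thru times below are made up for illustration), that scales each value onto eight Unicode block characters:

```python
# Unicode block characters from the lowest bar to the highest bar.
BARS = "▁▂▃▄▅▆▇█"


def sparkline(values: list[float]) -> str:
    """Map each value to one of eight bar heights, scaled between min and max."""
    if not values:
        return ""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        # A flat series has no range to scale, so draw every point at mid-height.
        return BARS[len(BARS) // 2] * len(values)
    last = len(BARS) - 1
    return "".join(BARS[round((v - lo) / span * last)] for v in values)


if __name__ == "__main__":
    # Hypothetical average drive-thru service times in seconds, one per hour.
    times = [212, 198, 240, 305, 287, 230, 190, 205]
    print(sparkline(times))
```

One line of bars next to each metric shows the trend at a glance, while the exact number can stay beside it.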

After following Tyler to Pokémon TCG Pocket, I decided to also try one of his favorite games: Clash Royale. I’m a total newbie. If you play, let’s connect!

Trying out Pokémon TCG Pocket

I saw that Pokémon TCG Pocket has a special event coming up, and Tyler has been collecting on this app for a long time, so I decided to join in as well.

If you want to connect with me, my friend code is 2059-0580-7070-6014.

An observation on TCG Pocket: if you owned Pokémon, had a huge market of collectible cards, and believed there was a future for digital collectibles like NFTs, this app is exactly what you would create. You have a brand-new ecosystem of digital-only cards that gets people used to all the typical things they do with the current physical cards, and it creates a whole additional digital ecosystem. It isn’t blockchain-based today, but the consumer behavior is the thing to focus on now, not how it is done.

I just got my first ChatGPT Pulse. I was more impressed than I expected. It was truly interesting. Curious to see how it changes each day.

With a market cap of $4 trillion, the 5% move in NVDA shares led to a $200 billion increase in market cap, double the $100 billion investment.
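The back-of-the-envelope math, treating the market cap as roughly $4 trillion and the move as roughly 5%:

$$
0.05 \times \$4\,\text{trillion} = \$200\,\text{billion} = 2 \times \$100\,\text{billion}
$$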

Nvidia, a chipmaker, signed a deal to invest as much as $100bn in OpenAI, an artificial-intelligence firm, to build data centres with a capacity of 10 gigawatts. Nvidia will reportedly receive equity in OpenAI in return. The firms said the deal would power advanced AI. More than 700m people use OpenAI each week. Shares in Nvidia were up nearly 5% on Monday. — The Economist, The World in Brief, September 23 2025

You gotta spend money to make money?

Ready for Analyst Day to get going!

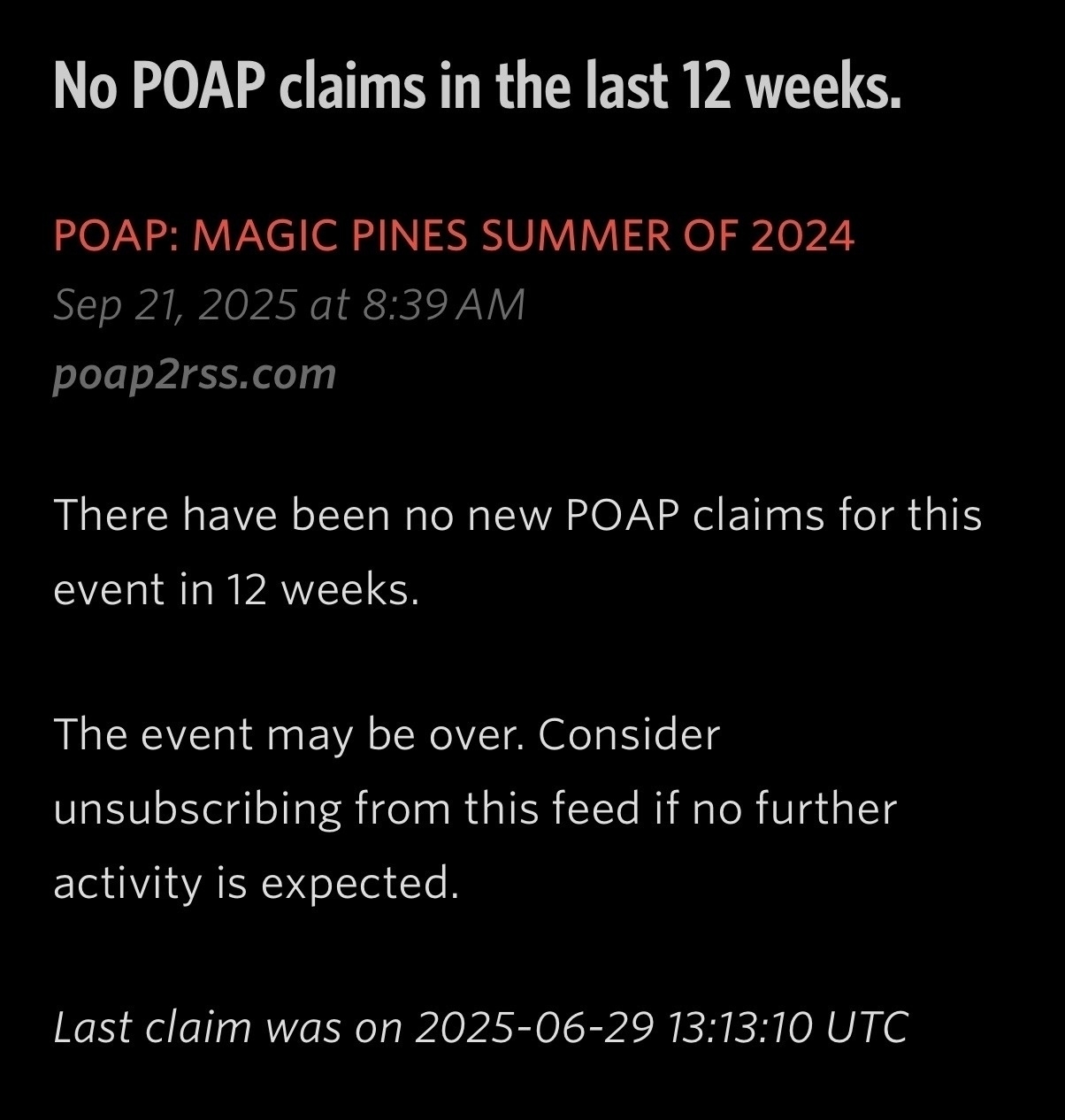

Saw this item in my RSS feed tonight. I had forgotten about this feature in POAP2RSS. It worked as intended, and I unsubscribed from this event feed.

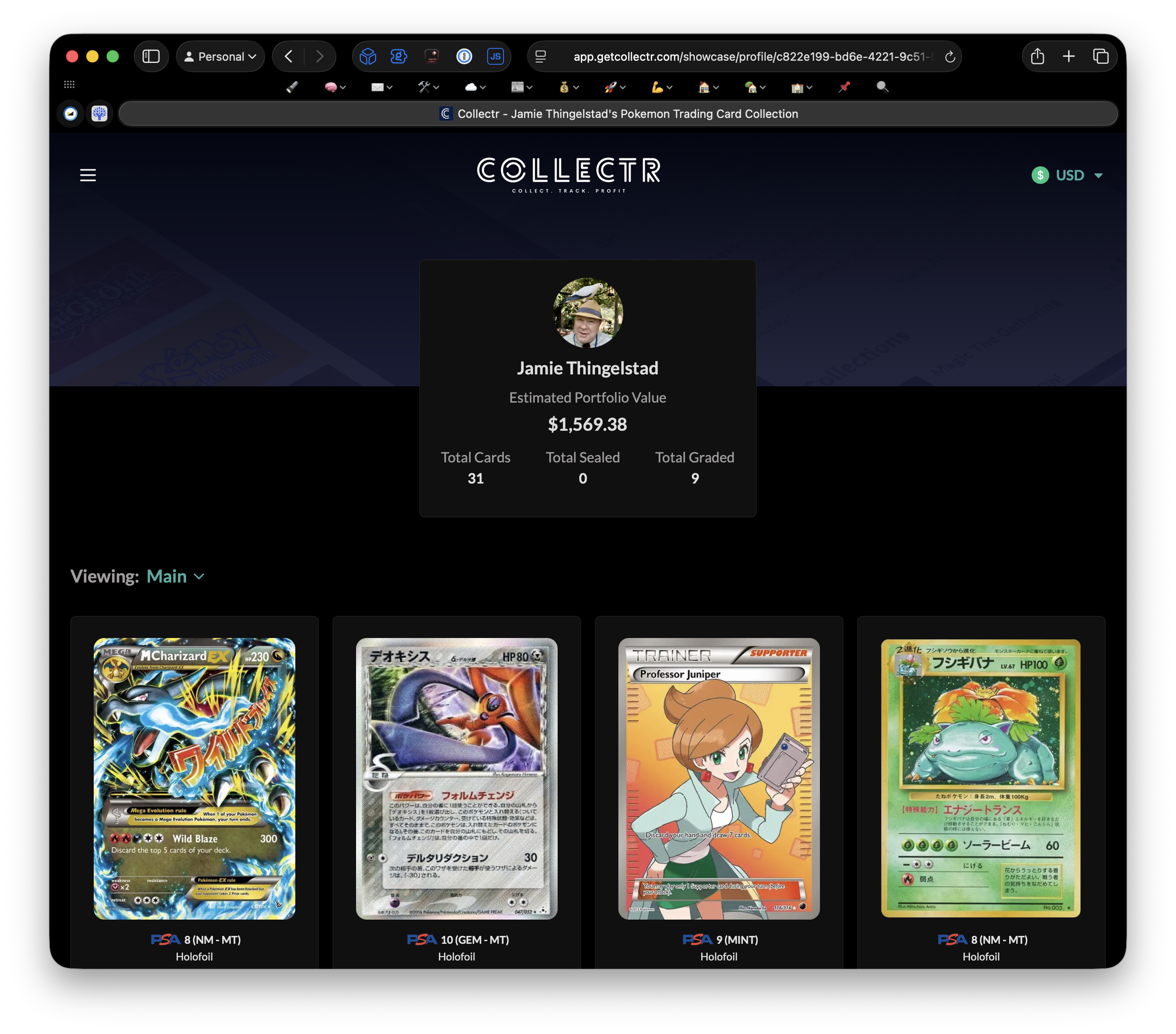

My Pokémon Collection

With some help from Tyler, I got my Collectr profile to accurately reflect my focused Pokémon collection. I have fun exploring Pokémon with Tyler, and there are three things I collect:

- Perrserker, with his Viking helmet, is the closest thing to a Nordic Pokémon. I love Iceland and my DNA traces back predominantly to the Nordics, so I feel a kinship to Perrserker. I’m collecting every Perrserker card there is and will have them all shortly. Perrserker evolves from Meowth, and I have picked up a couple of those, but I’m not collecting them.

- Alakazam is technically a Psychic type, but I also think of him as sort of magical, and when I used to play Dungeons & Dragons I always played a Wizard, so I’m collecting this one too. There are a ton of Alakazam cards! I may grab a Kadabra or Abra from his evolution line if they look cool.

- Lastly, any graded Pokémon cards that I just think are really cool-looking.

I only have 31 cards so far, but I like the collection, and having specific goals like this makes it a lot more fun. If only Collectr published an RSS feed for these profiles.

We had the stucco on our house redashed, which gave us an opportunity to put a new color on it. We wanted to lighten it up, and I’m very happy with the result.

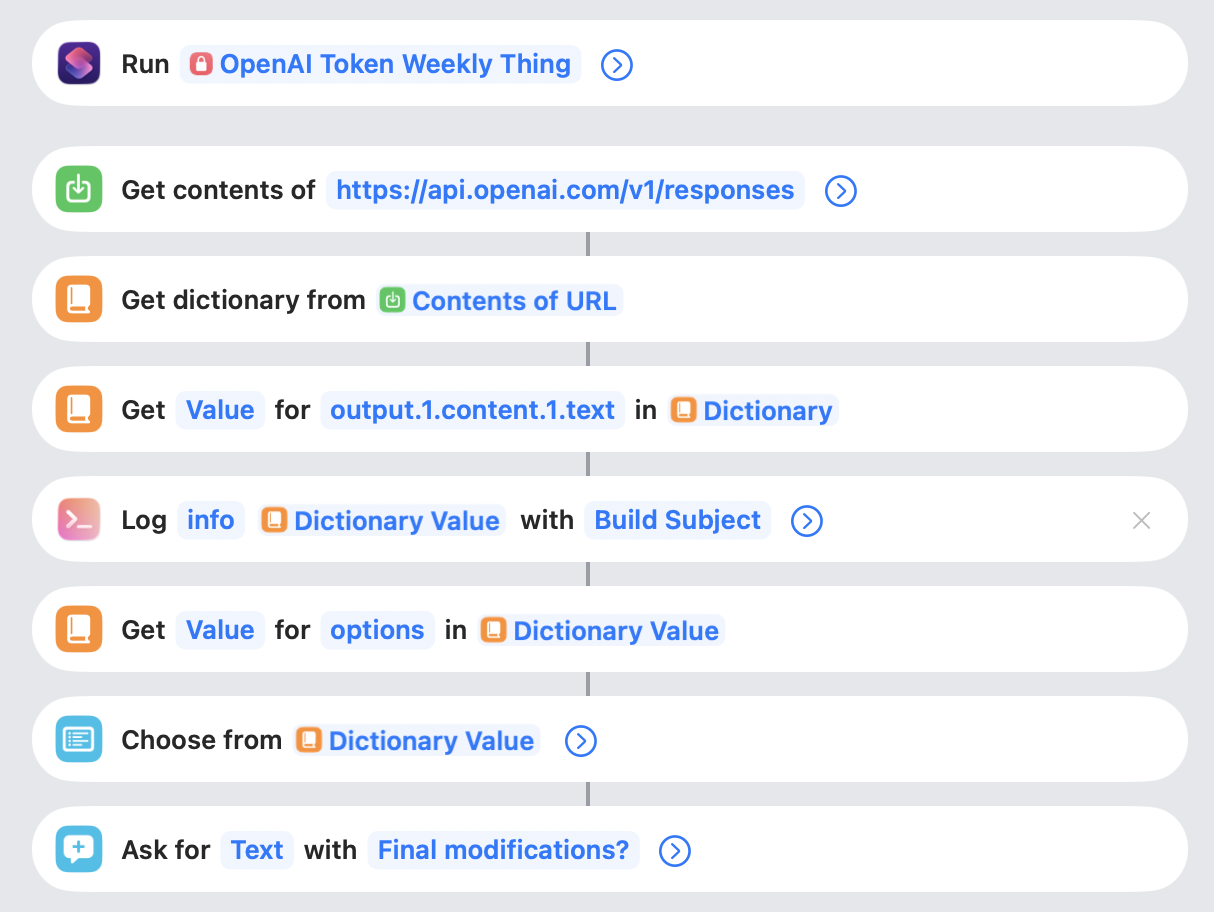

Use Apple Intelligence Models in Shortcuts

Shortcuts in OS 26 got a big new feature: the ability to use Apple Intelligence models directly. I’ve already had a taste of this by using OpenAI API calls in Shortcuts to add LLM capabilities. You can see how I’m using AI in the Weekly Thing for some examples. Being able to call an LLM from Shortcuts is very powerful for all kinds of automations, and I love how easy this now is with Apple Intelligence.

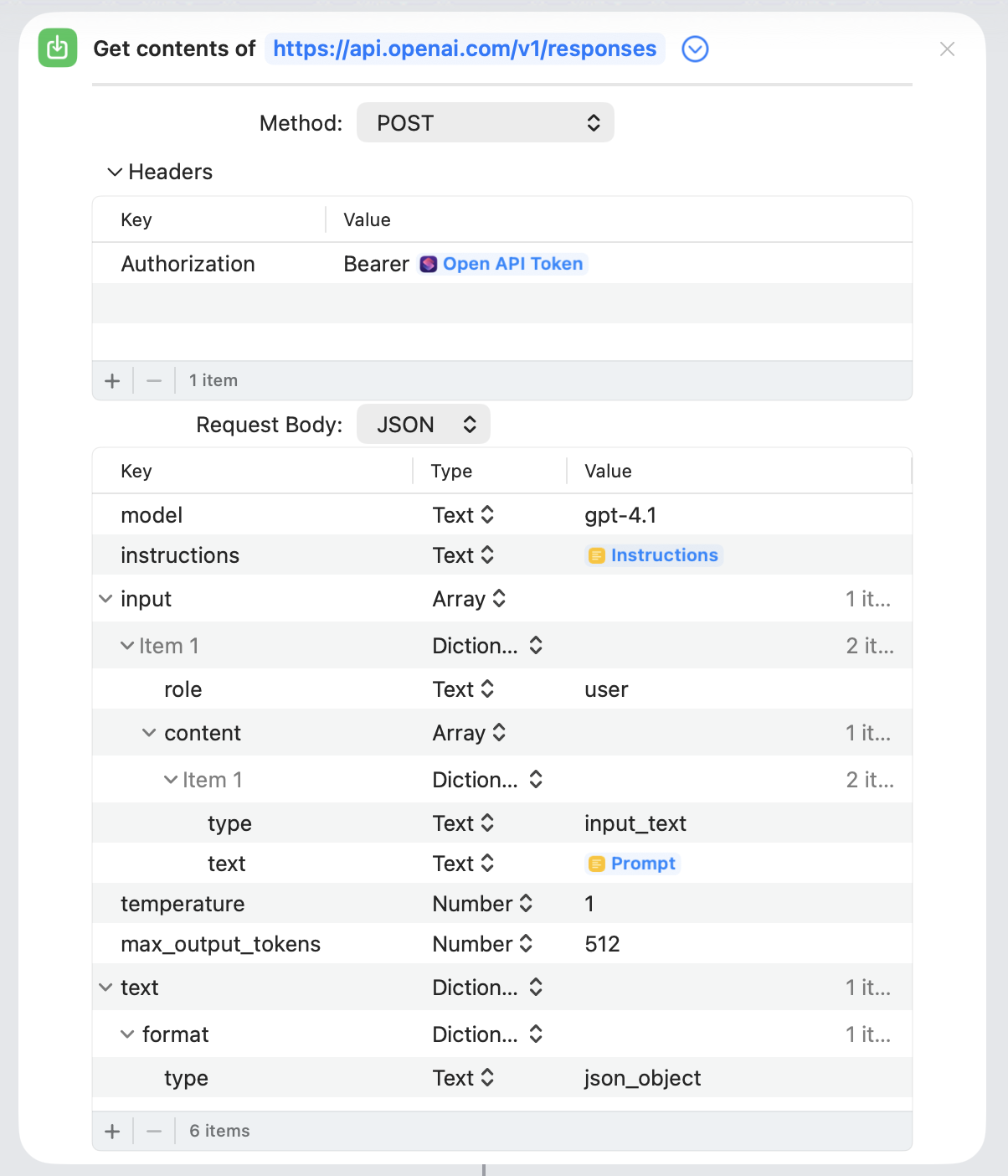

To compare, this is how I did it with direct calls to the OpenAI API.

There is a lot of fussy stuff to do to get keys, pass dictionaries around, extract the specific values, etc. And this actually hides the hardest part of all. If you expand that Get Contents of URL action, you’ll see this.

There’s no way anyone without a programming background is going to do this successfully. On top of that, my method for doing this is really brittle and prone to errors. I’m not handling all the possible API responses, and if there is a problem it will just bail.

I’m also just kind of hoping that the response is JSON and that I can marshal it into a variable. It works, but the prompt has to be right, and you’ll see I’m handling that in the API call.
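To show why the API route is so fussy, here is roughly what the same flow looks like written out in Python instead of Shortcuts actions. This is a hypothetical sketch, not a copy of my Shortcut: it assumes the OpenAI Chat Completions endpoint, an `OPENAI_API_KEY` environment variable, and a model name picked only for illustration. Unlike my Shortcut, it checks for error responses and non-JSON replies instead of just bailing:

```python
import json
import os
import urllib.error
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, system: str, model: str = "gpt-4o-mini") -> dict:
    """Build the request body. The default model name is an assumption."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": prompt},
        ],
    }


def parse_response(body: dict) -> dict:
    """Dig the reply out of the response and decode it as JSON.

    Raises ValueError with a readable message instead of failing silently.
    """
    if "error" in body:
        raise ValueError(f"API error: {body['error'].get('message', 'unknown')}")
    try:
        content = body["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError) as exc:
        raise ValueError("Unexpected response shape") from exc
    try:
        # This is the "hoping it's JSON" step; the prompt must ask for JSON.
        return json.loads(content)
    except (json.JSONDecodeError, TypeError) as exc:
        raise ValueError(f"Reply was not valid JSON: {content!r}") from exc


def ask(prompt: str, system: str) -> dict:
    """Send the request over the network and return the parsed JSON reply."""
    req = urllib.request.Request(
        API_URL,
        data=json.dumps(build_request(prompt, system)).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    try:
        with urllib.request.urlopen(req, timeout=30) as resp:
            body = json.load(resp)
    except urllib.error.HTTPError as exc:
        # Error statuses usually still carry a JSON error body worth reading.
        body = json.load(exc)
    return parse_response(body)
```

Even this sketch skips retries, rate limits, and timeouts beyond a single attempt, which is exactly the kind of plumbing the built-in Apple Intelligence action hides.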

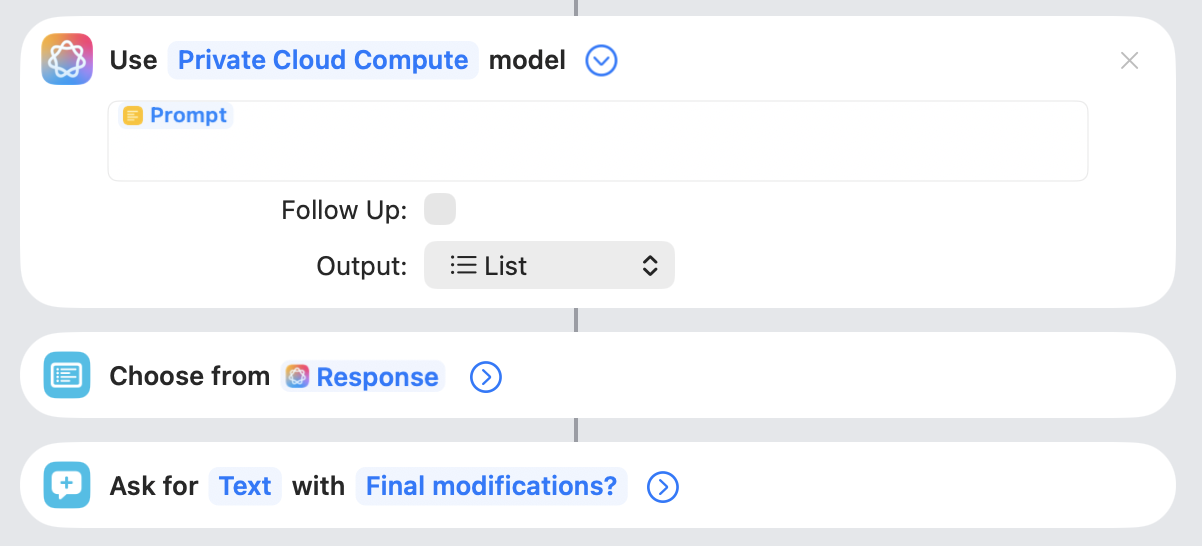

So, how about with Apple Intelligence and the built-in integration? Night and day difference.

Of course it is easier, but it is so much easier. And one of the big wins is the output format. You can just tell it what you would like to get back. This avoids a ton of prompt engineering and parsing.

The only thing I lose by dropping all this complexity is the ability to set a system message. For all of my use cases this hasn’t mattered at all. I just merged the system message into the prompt.
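Merging a system message into the prompt can be as simple as prepending the instructions. A minimal sketch in Python; the function name and the "Instructions:" / "Task:" labels are hypothetical choices for illustration, not anything the Shortcuts action requires:

```python
def merge_system_into_prompt(system: str, prompt: str) -> str:
    """Fold system-style instructions into a single prompt string.

    The labels and blank-line separator are arbitrary illustrative choices.
    """
    system = system.strip()
    prompt = prompt.strip()
    if not system:
        # Nothing to merge, so pass the prompt through unchanged.
        return prompt
    return f"Instructions:\n{system}\n\nTask:\n{prompt}"


if __name__ == "__main__":
    print(merge_system_into_prompt("Reply in one sentence.", "Summarize this article."))
```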

This is so easy that I’d encourage a lot of experimentation with pulling AI into your automations.

Spent the morning ordering materials for our Things 4 Good Fall Fundraiser, and candle scents and vessels are more “out of stock” than I’ve ever seen. Luckily, I have some vessel inventory, but not enough. It might be a scramble this year. I wonder if Makesy’s inventory issues are tariff-related? 😬

Disappointing 3-0 loss for MN United to Chicago Fire tonight. So many fouls. So many yellow cards. Nothing happening for the Loons at all tonight. A horrible game for the end of the season, with playoffs already clinched. ⚽️

Downtown for The Dakota’s 40th Anniversary Block Party. Awesome event. Tina Schlieske on stage right now! 🎶

We were in the area and finally got to visit Kyiv Cakes in Lakeville today. I’ve wanted to come here for a while. They have a broad menu of Ukrainian as well as traditional baked goods. We got a slice of Honey Cake with raspberries and it was light and delicious! Recommended. 🇺🇦

Lakeville Art Festival

We found ourselves with some open time this weekend and decided to check out the Lakeville Art Festival this morning. We’ve been to many art fairs, but this was our first time at this particular one, and we thought it was great. They had an excellent selection of art from artists in Minnesota and neighboring states. It was set up very nicely on grass instead of blacktop.

It was great that they put the music stage and the food in a separate adjacent area with a good number of picnic tables. It wasn’t hard to find a spot to sit and enjoy a delightful lunch from Pizzeria 201 of Montgomery, MN (we once drove there to have their pizza), followed by some HomeTown Creamery ice cream. We also brought home some Groveland Confections chocolate.

Overall it had a nice and relaxed vibe.

Some artists that caught our eye:

- Tin Cat Studio

- Amanda Pearson

- Pleasant Street Pottery

- Dan Wiemer

- Mya Austin

- Platypus Builds: We very nearly came home with a super cool lamp made entirely from wood.

- Reiko Uchytil

- Barret Lee

- Wenwen Liao

- Shane Anderson: Very “guy art,” but I dug the concept, and he had an interesting bison painting.

- Tyler Maddaus: His bison painting caught my eye.

- Burly Babe Woodworking

- Noah Sanders: We very nearly came home with one of his fox paintings. It may still happen.

- Ed Lefto: We purchased one of his birdhouses. (The URL exists, but there’s no website.)