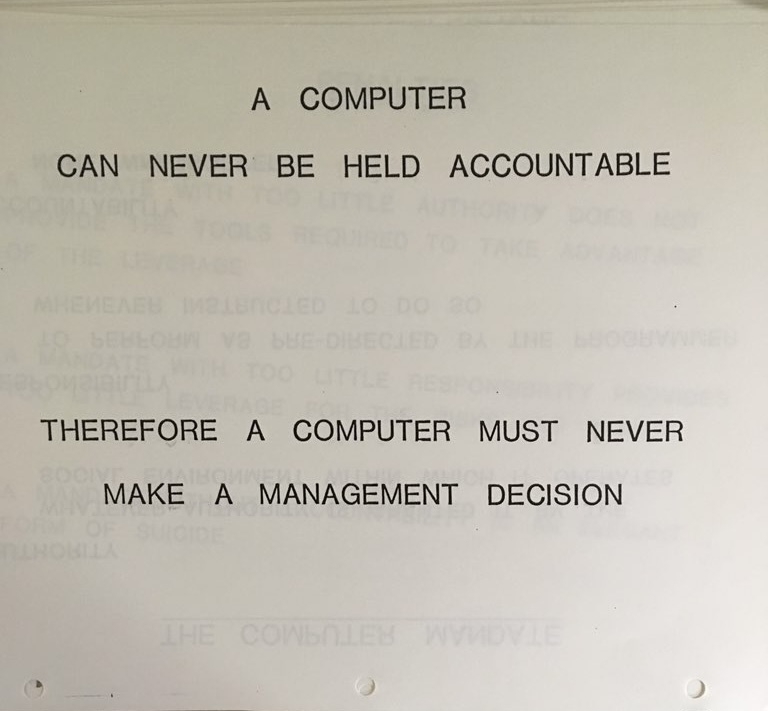

A computer can never be held accountable

Simon Willison shared this excerpt from an IBM training manual on his blog recently.

Willison did some research and traced the excerpt to a training manual from 1979.

I’m reading this 46 years later and it hits very different in our modern age of AI. Does AI make this statement any more or less true? I don’t think so.

What it has me thinking about just as much, though, is the places where people may be attempting to make AI appear to be accountable.

If there is no way to hold AI accountable, is attempting to make it appear accountable a way of evading or sidestepping accountability?